Chap 5: Semantics

Lexical semantics

Lexical semantics is the study of word meaning. Two issues of demarcation are relevant in this respect. First, although the definition of lexical semantics may suggest otherwise, morphological semantics (the study of the meaning of complex words as a function of the meaning of their parts and the way they are constructed) is usually considered a separate field from lexical semantics proper.

Word senses

The meaning of a lemma can vary enormously given the context. Consider these two uses of the lemma bank, meaning something like ‘financial institution’ and ‘sloping mound’, respectively:

- Instead, a bank can hold the investments in a custodial account in the client’s name.

- But as agriculture burgeons on the east bank, the river will shrink even more.

We represent some of this contextual variation by saying that the lemma bank has two senses. A sense (or word sense) is a discrete representation of one aspect of the meaning of a word. Loosely following lexicographic tradition, we will represent each sense by placing a superscript on the orthographic form of the lemma, as in bank¹ and bank².
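The idea of a lemma carrying several numbered senses can be sketched as a small data structure. This is only an illustration: the `Sense` record, the `SENSES` table, and `senses_of` are hypothetical names, and the glosses simply paraphrase the two uses of bank discussed above.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Sense:
    lemma: str
    index: int   # the superscript, e.g. 1 for the first sense of bank
    gloss: str

    def label(self) -> str:
        # Plain-text stand-in for the superscript notation.
        return f"{self.lemma}{self.index}"

# Toy sense inventory (assumed for illustration, not taken from a real lexicon).
SENSES = {
    "bank": [
        Sense("bank", 1, "financial institution"),
        Sense("bank", 2, "sloping mound"),
    ]
}

def senses_of(lemma: str) -> List[Sense]:
    """Return the listed senses of a lemma, or an empty list if it is unknown."""
    return SENSES.get(lemma, [])
```

Calling `senses_of("bank")` returns both senses, while an unlisted lemma yields an empty list.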

Relations between senses

Synonymy and Antonymy

When the meanings of two senses of two different words (lemmas) are identical or nearly identical, we say the two senses are synonyms. Synonyms include such pairs as:

- couch/sofa

- vomit/throw up

- filbert/hazelnut

- car/automobile

Synonyms are words with identical or similar meanings. Antonyms, by contrast, are words with opposite meanings, such as the following:

- long/short

- big/little

- fast/slow

- cold/hot

- dark/light

- rise/fall

- up/down

- in/out

Another group of antonyms is reversives, which describe some sort of change or movement in opposite directions, such as rise/fall or up/down.

Taxonomic Relations

Another way word senses can be related is taxonomically. One sense is a hyponym of another sense if the first sense is more specific, denoting a subclass of the other.

For example, car is a hyponym of vehicle; dog is a hyponym of animal, and mango is a hyponym of fruit.

Conversely, we say that vehicle is a hypernym of car, and animal is a hypernym of dog.

It is unfortunate that the two words (hypernym and hyponym) are very similar and hence easily confused; for this reason, the word superordinate is often used instead of hypernym.

We can define hypernymy more formally by saying that the class denoted by the superordinate extensionally includes the class denoted by the hyponym.

Thus the class of animals includes as members all dogs, and the class of moving actions includes all walking actions.

Hypernymy can also be defined in terms of entailment. Under this definition, a sense A is a hyponym of a sense B if everything that is A is also B and hence being an A entails being a B, or ∀x A(x) ⇒ B(x). Hyponymy is usually a transitive relation; if A is a hyponym of B and B is a hyponym of C, then A is a hyponym of C.
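The transitivity of hyponymy can be illustrated with a few lines of code. This is a minimal sketch under simplifying assumptions: each sense has at most one direct hypernym, the table is a toy fragment rather than WordNet data, and the `poodle` entry is a hypothetical addition used only to show a two-step chain.

```python
# Direct hypernym links (hyponym -> hypernym); a toy fragment for illustration.
DIRECT_HYPERNYM = {
    "car": "vehicle",
    "dog": "animal",
    "mango": "fruit",
    "poodle": "dog",  # hypothetical extra link to demonstrate transitivity
}

def is_hyponym(a, b):
    """Return True if a is a direct or inherited hyponym of b."""
    current = DIRECT_HYPERNYM.get(a)
    while current is not None:
        if current == b:
            return True
        # Climb one level: hyponymy is inherited through the chain.
        current = DIRECT_HYPERNYM.get(current)
    return False
```

Because poodle is a hyponym of dog and dog is a hyponym of animal, `is_hyponym("poodle", "animal")` holds, while the reverse direction does not.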

Meronymy

Another very common relation is meronymy, the part-whole relation. A leg is part of a chair; a wheel is part of a car.

We say that wheel is a meronym of car, and car is a holonym of wheel.
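Meronymy and holonymy are two views of the same part-whole pairs, which a short sketch can make concrete. The `PART_OF` table and the two helper functions are hypothetical names, and the pairs are just the examples given above.

```python
# (part, whole) pairs taken from the examples in the text.
PART_OF = [("leg", "chair"), ("wheel", "car")]

def meronyms(whole):
    """Parts of the given whole."""
    return [part for part, w in PART_OF if w == whole]

def holonyms(part):
    """Wholes that the given part belongs to."""
    return [whole for p, whole in PART_OF if p == part]
```

Storing each pair once and reading it in either direction keeps the two relations consistent by construction.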

Structured Polysemy

The senses of a word can also be related semantically, in which case we call the relationship between them structured polysemy. Consider this sense of bank:

The bank is on the corner of Nassau and Witherspoon.

This sense, perhaps bank⁴, means something like “the building belonging to a financial institution”. These two kinds of senses (an organization and the building associated with an organization) occur together for many other words as well (school, university, hospital, etc.).

Thus, there is a systematic relationship between senses that we might represent as BUILDING ↔ ORGANIZATION.

This particular subtype of polysemy relation is called metonymy. Metonymy is the use of one aspect of a concept or entity to refer to other aspects of the entity or to the entity itself.

We are performing metonymy when we use the phrase the White House to refer to the administration whose office is in the White House.

Semantic Fields

There is a more general way to think about sense relations and word meaning. Where the relations we’ve defined so far have been binary relations between two senses, a semantic field is an attempt to capture a more integrated, or holistic, relationship among entire sets of words from a single domain.

Consider the following set of words extracted from the ATIS corpus:

reservation, flight, travel, buy, price, cost, fare, rates, meal, plane

WordNet: A Database of Lexical Relations

The most commonly used resource for sense relations in English and many other languages is the WordNet lexical database (Fellbaum, 1998). English WordNet consists of three separate databases, one each for nouns and verbs and a third for adjectives and adverbs; closed class words are not included.

Each database contains a set of lemmas, each one annotated with a set of senses. The WordNet 3.0 release has 117,798 nouns, 11,529 verbs, 22,479 adjectives, and 4,481 adverbs.

The average noun has 1.23 senses, and the average verb has 2.16 senses. WordNet can be accessed on the Web or downloaded locally. Figure G.1 shows the lemma entry for the noun and verb man.

Note that there are eleven senses as a noun and two as a verb, each of which has a gloss (a dictionary-style definition), a list of synonyms for the sense, and sometimes also usage examples.

Synset

The set of near-synonyms for a WordNet sense is called a synset (for synonym set); synsets are an important primitive in WordNet. The entry for man includes synsets like {man#1, adult male#1}, or {serviceman#1, military man#1, man#2, military personnel#2}. We can think of a synset as representing a concept. Thus, instead of representing concepts in logical terms, WordNet represents them as lists of the word senses that can be used to express the concept. Here’s another synset example:

{chump¹, fool², gull¹, mark⁹, patsy¹, fall guy¹, sucker¹, soft touch¹, mug²}

The gloss of this synset describes it as:

Gloss: a person who is gullible and easy to take advantage of.
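A synset can be pictured as a set of (lemma, sense number) pairs that share one gloss. The sketch below is an illustration only: `Synset`, `GULLIBLE`, and `same_concept` are hypothetical names, not part of any WordNet API, and the members are copied from the example above.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Tuple

WordSense = Tuple[str, int]  # (lemma, sense number)

@dataclass(frozen=True)
class Synset:
    members: FrozenSet[WordSense]
    gloss: str

# The example synset from the text.
GULLIBLE = Synset(
    members=frozenset({
        ("chump", 1), ("fool", 2), ("gull", 1), ("mark", 9), ("patsy", 1),
        ("fall guy", 1), ("sucker", 1), ("soft touch", 1), ("mug", 2),
    }),
    gloss="a person who is gullible and easy to take advantage of",
)

def same_concept(a: WordSense, b: WordSense, synsets: Iterable[Synset]) -> bool:
    """Two word senses express the same concept if some synset contains both."""
    return any(a in s.members and b in s.members for s in synsets)
```

Note that membership is decided per sense, not per lemma: the second sense of fool is in this synset, but other senses of fool are not.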

Supersenses

WordNet also labels each synset with a lexicographic category drawn from a semantic field, for example the 26 categories for nouns shown in the figure below, as well as 15 for verbs (plus 2 for adjectives and 1 for adverbs). These categories are often called supersenses, because they act as coarse semantic categories or groupings of senses, which can be useful when word senses are too fine-grained. Supersenses have also been defined for adjectives and prepositions.

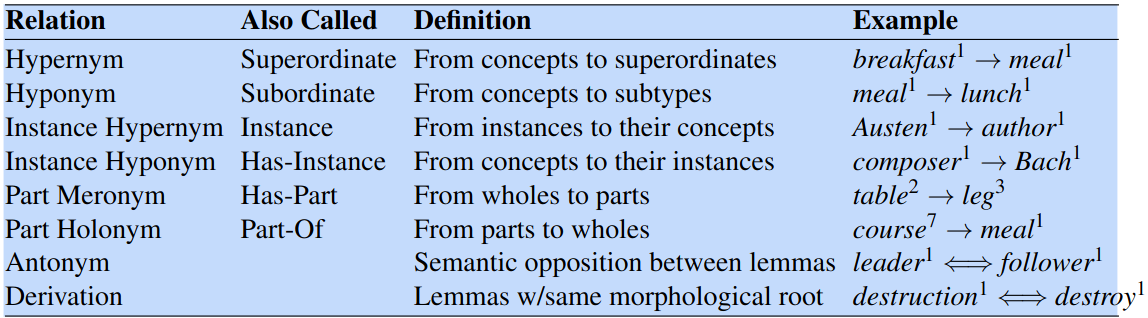

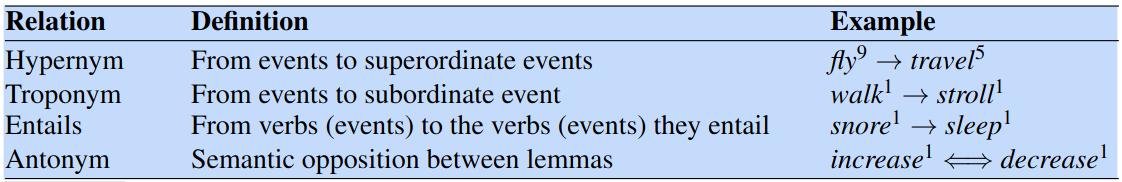

Sense Relations in WordNet

WordNet represents all the kinds of sense relations discussed in the previous section, as illustrated in the figures below.

Some of the noun relations in WordNet.

For example, WordNet represents hyponymy by relating each synset to its immediately more general and more specific synsets through direct hypernym and hyponym relations. These relations can be followed to produce longer chains of more general or more specific synsets.

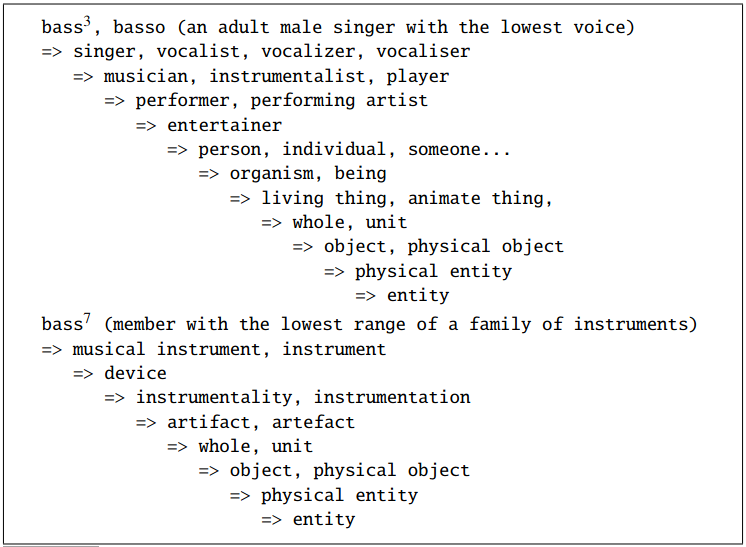

The figure below shows hypernym chains for bass³ and bass⁷; more general synsets are shown on successively indented lines.

Classes and instances

WordNet has two kinds of taxonomic entities: classes and instances. An instance is an individual, a proper noun that is a unique entity. San Francisco is an instance of city, for example. But city is a class, a hyponym of municipality and eventually of location. The figure below shows a subgraph of WordNet demonstrating many of the relations.

Algeria is an instance of African country

A subnet of WordNet
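The distinction between classes and instances can be sketched by keeping instance links separate from hypernym links. This is a toy illustration, not WordNet data: the table and function names are hypothetical, and the step from municipality to location is collapsed into one link even though the text says city reaches location only "eventually".

```python
# Instance links: a unique individual -> the class it is an instance of.
INSTANCE_OF = {"San Francisco": "city", "Algeria": "African country"}

# Hypernym links between classes (intermediate steps collapsed for illustration).
HYPERNYM = {"city": "municipality", "municipality": "location"}

def is_instance(entity):
    """Instances are individuals; everything else here is treated as a class."""
    return entity in INSTANCE_OF

def classes_of(entity):
    """All classes above an entity, most specific first."""
    chain = []
    # An instance starts with its instance link; a class starts with its hypernym.
    current = INSTANCE_OF.get(entity, HYPERNYM.get(entity))
    while current is not None:
        chain.append(current)
        current = HYPERNYM.get(current)
    return chain
```

Under these assumptions, San Francisco is an instance whose classes are city, municipality, and location, whereas city itself is a class with two classes above it.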

Arabic WordNet

Arabic WordNet (AWN) is a lexical resource for Modern Standard Arabic based on the design and contents of the widely used Princeton WordNet (PWN) for English. It is intended to map straightforwardly onto PWN 2.0 and EuroWordNet (EWN), enabling translation at the lexical level to English and dozens of other languages. The designers developed and linked AWN with the Suggested Upper Merged Ontology (SUMO), in which concepts are defined with machine-interpretable semantics in first-order logic. They greatly extended the ontology and its set of mappings to provide formal terms and definitions for each synset.

AWN content

Compositional semantics

The general theory in compositional semantics: The meaning of a phrase is determined by combining the meanings of its subphrases, using rules which are driven by the syntactic structure.

The principle of compositionality

• In any natural language, there are infinitely many sentences, and the brain is finite. So, for syntax, linguistic competence must involve some finitely describable means for specifying an infinite class of sentences.

A speaker of a language knows the meanings of those infinitely many sentences, and is able to understand a sentence he/she hears for the first time. So, for semantics, there must also be finite means for specifying the meanings of the infinite set of sentences of any natural language.

• In generative grammar, a central principle of formal semantics is that the relation between syntax and semantics is compositional.

The principle of compositionality (Fregean Principle):

The meaning of a complex expression is determined by the meanings of its parts and the way they are syntactically combined.

The meaning of a sentence is the result of applying the unsaturated part of the sentence (a function) to the saturated part (an argument).
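The view of sentence meaning as function application can be sketched in a few lines. Everything here is an assumption made for illustration: the tiny model `RUNNERS`, the choice of the predicate "runs", and the function names are hypothetical and not part of any formal semantics toolkit.

```python
# Toy model (assumed for illustration): the individuals who run.
RUNNERS = {"socrates"}

def name_meaning(word):
    """Saturated part: a proper name denotes an individual."""
    return word.lower()

def runs_meaning(individual):
    """Unsaturated part: a predicate maps an individual to a truth value."""
    return individual in RUNNERS

def sentence_meaning(predicate, subject):
    """Sentence meaning = the predicate function applied to its argument."""
    return predicate(name_meaning(subject))
```

In this model, `sentence_meaning(runs_meaning, "Socrates")` is true because the function denoted by the predicate returns true for the individual denoted by the subject.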

Understanding natural language

The prerequisite for any deeper understanding is the ability to identify the intended literal meaning.

- “eat sushi with chopsticks” does not mean that chopsticks were eaten

True understanding also requires the ability to draw appropriate inferences that go beyond literal meaning:

- Lexical inferences (depend on the meaning of words): You are running —> you are moving.

- Logical inferences (e.g. syllogisms): All men are mortal. Socrates is a man —> Socrates is mortal.

- Common sense inferences (require world knowledge): It’s raining —> You get wet if you’re outside.

- Pragmatic inferences (speaker’s intent, speaker’s assumptions about the state of the world, social relations): Boss says “It’s cold here” —> Assistant gets up to close the window.
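The lexical and logical inferences in the list above can both be approximated with simple rule application; common sense and pragmatic inferences need far more knowledge and are not covered. The sketch below is a deliberately simplified assumption: each rule says that anything satisfying one predicate also satisfies another, and the names `RULES` and `infer` are hypothetical.

```python
# Each rule reads: anything that is <key> is also <value>.
RULES = {
    "running": "moving",  # lexical inference
    "man": "mortal",      # "All men are mortal"
}

def infer(facts):
    """Forward-chain over (predicate, entity) facts until nothing new is added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for predicate, entity in list(known):
            conclusion = RULES.get(predicate)
            if conclusion is not None and (conclusion, entity) not in known:
                known.add((conclusion, entity))
                changed = True
    return known
```

Starting from the single fact that Socrates is a man, the procedure adds the fact that Socrates is mortal.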

In order to understand language, we need to be able to identify its (literal) meaning.

- How do we represent the meaning of a word? (Lexical semantics)

- How do we represent the meaning of a sentence? (Compositional semantics)

- How do we represent the meaning of a text? (Discourse semantics)

Simple semantics in feature structures

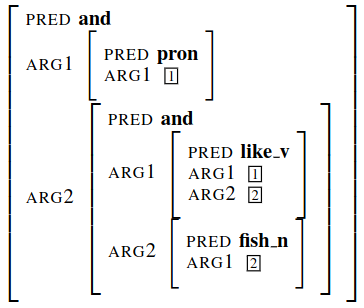

The grammar fragment below is based on the feature structures. It is intended as a rough indication of how it is possible to build up semantic representations using feature structures.

The lexical entries have been augmented with pieces of feature structure reflecting predicate-argument structure. With this grammar, the FS for they like fish will have a SEM value of:

This can be taken to be equivalent to the logical expression pron(x) ∧ (like_v(x, y) ∧ fish_n(y)) by translating the reentrancy between argument positions into variable equivalence.

The most important thing to notice is how the syntactic argument positions in the lexical entries are linked to their semantic argument positions. This means, for instance, that for the transitive verb like, the syntactic subject will always correspond to the first argument position, while the syntactic object will correspond to the second position.
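The linking of syntactic to semantic argument positions can be sketched without a full feature-structure formalism. This is a rough approximation under stated assumptions: the `LEXICON` dictionary, `sem_transitive`, and `to_formula` are hypothetical, shared variables stand in for reentrancy, and the conjunction is printed flat rather than nested.

```python
# Toy lexicon: each word contributes one semantic predicate.
LEXICON = {
    "they": "pron",
    "like": "like_v",
    "fish": "fish_n",
}

def sem_transitive(subject, verb, obj):
    """Build the conjuncts for a subject-verb-object clause.

    The subject's variable fills the verb's first argument position and the
    object's variable fills the second, mimicking reentrancy.
    """
    x, y = "x", "y"
    return [
        (LEXICON[subject], (x,)),
        (LEXICON[verb], (x, y)),
        (LEXICON[obj], (y,)),
    ]

def to_formula(conjuncts):
    """Render the conjuncts as a flat logical conjunction."""
    return " ∧ ".join(f"{pred}({', '.join(args)})" for pred, args in conjuncts)
```

For they like fish, `to_formula(sem_transitive("they", "like", "fish"))` yields `pron(x) ∧ like_v(x, y) ∧ fish_n(y)`, with x shared by the subject and the verb's first argument and y shared by the object and the second.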